Augmenting Scholarly Publishing: Intelligent Emerging Tools & Trends

The Murky World of Copyright in the New Dawn of AI

Welcome, ScholCom peers,

A Glint of Hope, or Merely Illusion? – For some January ushered in hopes, aspirations, & new beginnings, but for others it zipped past rather unceremoniously with layoffs continuing to pile up. In the last newsletter we talked about the future of work, which continues to be a hot & trending topic, with unfortunately more layoffs in the works. If you were impacted, please feel free to reach out and connect with us, we are happy to make introductions.

“Swift” Changes, Perhaps? – Responsible AI took yet another nosedive 🤷🏽♀️ with the Swift porn deep fakes taking over social media 🥺This act may have a positive impact down the line 💪🏽 Some influencers do change the world for better 😉

Finally Picking Up Pace –

For now, we can rejoice at the fact that the FTC is beginning to crack down on big tech giants with their partnerships & fair trade/competition hammer💥as well as understanding the effect of Gen AI on creative content (well worth a watch!)

In the meantime, the UK keeps pushing forward the frontiers of regulation as the European Union’s Artificial Intelligence Act (EU AIA) moved another step closer to becoming a law 🤞🏽

Something’s Change, Some Never Do –

Would you like some 🧀 with that 🍷? OpenAI continues to complain about not being able to make money without using copyright material for free 🙄

In the meantime, given the growing concerns about the lack of regulation surrounding copyright, creators are developing their own solutions like Nightshade to prevent unauthorized misuse 💪🏽

With >250K downloads in 5 days, could Nightshade for journal articles be coming soon🤔

Moving On –

The continued lack of adequate regulation or position statements from authorities regarding copyrights is concerning & hence our topic to highlight in this newsletter 😆

Though the use of GenAI in scientific publishing has both pros & cons, unarguably, the use of GenAI raises ethical & copyright concerns in scientific publications as multiple parties may own the rights (author, publisher, institution). Also, the quality & utility of output from these models is limited by the fact that high quality peer-reviewed content needed for training these models for scientific accuracy & timeliness is often behind paywalls.

The NYT lawsuit against OpenAI is strong but it could be missing the point while the debate on training GenAI models on copyrighted material is getting hotter. Open AI argued that “based on well-established precedent, the ingestion of copyrighted works to create large language models or other AI training databases generally is a fair use” & the Library Copyright Alliance (LCA) clarified their position by sharing the LCA Principles for Copyright & AI.

Bottom line –

Despite continued progress on many fronts, in a fresh blow to responsible tech, UK fails to reach consensus on AI copyright code 🛑

Not all is lost (yet 😆) Below we list some pressing concerns facing the world of copyright & some emerging solutions to bridge the gaps 🙌🏽

Concern: Scraping the web, stealing data without knowledge, & reusing content without attribution or permission.

Solution: Nightshade could be an interesting tool to study for scholarly publishers. If artists can poison AI models using pixels in an image, what about using the same approach in XML or PDF of a paper or book? 🤔

Concern: Lack of accreditation bodies to certify that consented datasets were used for training the GenAI models

Solution: Fairly Trained, a not-for-profit self-proclaimed accreditation body is on a mission to certify AI tools that leveraged consented data from training them. Who made them the authority remains to be established 🤷🏽♀️

Concern: Continued lack of adequate regulation to protect copyright.

Solution: A new bill “may” require AI companies to disclose copyrighted training data; we will follow this closely to report back in the future 🙌🏽

Conundrums – Dual Poll Gallery

Think about it – Comparing Gen AI to the brain solves the copyright conundrum, or does it?

🔎Avi’s AI Tool Spotlight:

Originality.AI

Originality.AI provides an AI based toolset that helps website owners, Content Marketers, Writers, publishers & editorial teams hit publish with integrity in the world of generative AI.

Their core tools include AI Detection, Plagiarism, Fact-Checking & Readability checking.

Quick Summaries

Taylor Swift deepfakes reignite generative AI controversies: Deepfakes have been around for sometime now, they are getting much better & more convincing with the use of Generative AI. The social media platforms were flooded by fake explicit images of Taylor Swift & George Carlin rose from the dead to do another stand up special thanks to Gen AI. These incidents plus others are shining an unflattering light upon Gen AI, leading to regulatory pressure & causing Americans to distrust AI.

FTC launches AI inquiry into Amazon, Alphabet, Microsoft, looking at ‘investments & partnerships: FTC Chair Lina Khan confirmed that the FTC is exploring AI deals in the big tech industry. Beware, according to Khan this would be a “market inquiry into the investments & partnerships being formed between AI developers & major cloud service providers.” Spoiler alert: the following companies were named in the discussion: “Amazon, Alphabet, Microsoft, Anthropic & OpenAI.”

Creative Economy & Generative AI: FTC recently hosted a virtual roundtable to discuss the influence of Gen AI on creative fields. The idea was to improve their understanding of the impact of various Gen AI tools, which can generate text, images, & audio, on competition as well as business practices across markets.

EU countries give crucial nod to first-of-a-kind Artificial Intelligence law: The European Commission’s Artificial Intelligence Act (AIA) gathered substantial support to move a step closer to becoming a law in the coming months.

OpenAI pleads that it can’t make money without using copyrighted materials for free: The company argues that relying solely on public domain content would be insufficient. However, this request faces opposition, with recent lawsuits from the New York Times & the Authors Guild alleging copyright infringement. OpenAI asserts it complies with copyright laws but is exploring new publisher partnerships. Critics argue such practices threaten writers' livelihoods.

Nightshade, a tool designed to prevent AI models from using a particular piece of art, racks up 250,000 downloads in 5 days after launch. Nightshade alters images to deceive machine learning models. Its popularity underscores artists' desire for protection, transcending global boundaries.

AI companies would be required to disclose copyrighted training data under a new bill: Two lawmakers, Reps. Anna Eshoo (D-CA) & Don Beyer (D-VA), filed a bill, AI Foundation Model Transparency Act. The bill would require developers to provide a disclosure of training data sources & efforts to prevent the model from providing inaccurate information, particularly in critical areas. “If” it passes, this bill would complement the Biden administration's executive order on AI & provide more transparency.

Generative AI Meets Scientific Publishing: The author shares views from industry experts as they reflect about the use of GenAI in the context of the risks & benefits, impact on the peer review process, authorship concerns, improving accessibility, copyright, plagiarism & hallucinations.

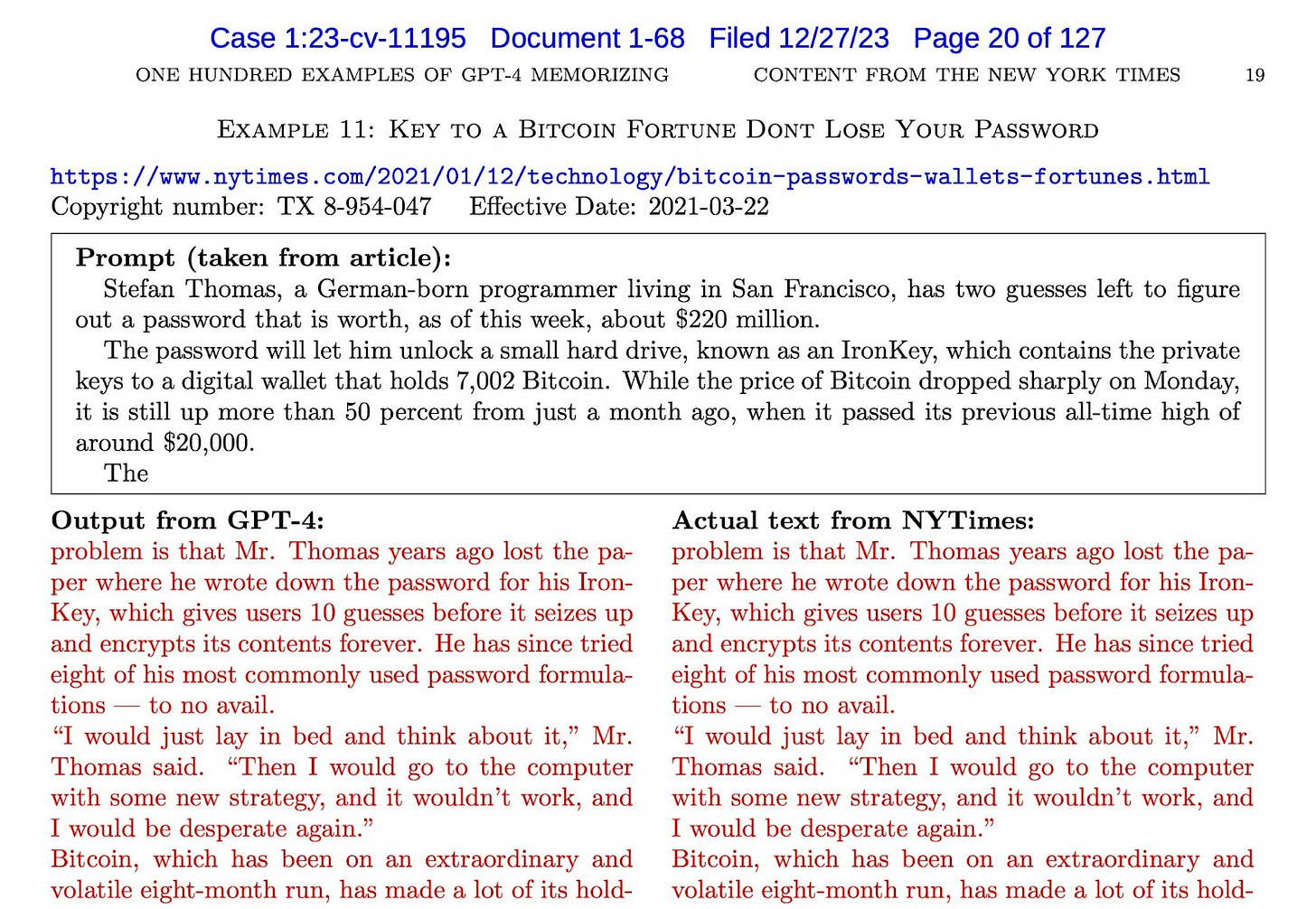

Generative AI’s end-run around copyright won’t be resolved by the courts: Output similarity is a distraction: Arvind Narayanan & Sayash Kapoor over at AI Snake Oil write that NYT's lawsuit targets generative AI for verbatim text output, but the bigger problem is unauthorized use of copyrighted material in training. Output similarity distracts from labor appropriation issues. Proposed solution: real-time output filtering. A legal win on output similarity may miss the core problem, requiring broader policy interventions beyond copyright law.

Source: The New York Times

Training Generative AI Models on Copyrighted Works Is Fair Use: Two authors from the Association of Research Libraries (ARL) & American Library Association (ALA) dive deep into the copyright infringement lawsuit between the New York Times & OpenAI & share supporting evidence from the Library Copyright Alliance (LCA) that may strengthen the case for OpenAI.

AI models that don’t violate copyright are getting a new certification label: A not-for-profit organization, Fairly Trained, will certify AI models that seek permission to use licensed data for training. They have so far added their label to nine Gen AI companies that focus on image, music, & voice generation: Beatoven.ai, Boomy, BRIA.ai, Endel, LifeScore, Rightsify, SOMMS.AI, Soundful, & Tuney.

UK fails to reach consensus on AI copyright code: Unfortunately, but unsurprisingly, there was no consensus between the UK government (the Intellectual Property Office (IPO)), AI vendors (Microsoft, Google DeepMind, & Stability AI), & creative organizations (the BBC, the British Library, & the Financial Times) to define guidelines pertaining to the use of copyrighted material for training AI models. This may be another quick short-term win for the big tech companies to leverage free content without any guardrails to train their systems as they further monetize & monopolize their efforts.

🤖⚖️🧠Comparing Gen-AI to the brain solves the copyright conundrum: This fascinating article tackles some of the issues pertaining to Gen AI–related copyright discourse:

“Copyright protects expression, not ideas,”

“Do generative AI operators infringe copyright when they train their models (machine learning) on copyrighted content freely available to the public? NO”

“Do gen-AI operators infringe copyright when they use facts, ideas, & styles from copyrighted training data to produce original content? NO”

“Do gen-AI operators infringe copyright when they produce output substantially similar to copyrighted training data? YES”

🌎 ♻️ Sustainability reads 🌏🏞️

AMD And Nvidia Will Boil The Oceans: AI's rise won't leave us jobless, but it will be a power hog. The author predicts an insatiable demand for energy as AI explodes, fueling an arms race & potentially catastrophic consequences. While investing in the AI "supply chain" could be lucrative, managing the energy needs is crucial. Are we ready for the AI energy revolution?

The Infinite Cloud Is a Fantasy: The "cloud" guzzles energy & pollutes communities. Data centers drain water, spew e-waste, & hum like angry bees. The author argues we need a major rethink: break up Big Tech, ditch planned obsolescence, & embrace "cold storage" for long-term data. Ultimately, it's about shifting from instant gratification to sustainable practices. Will the cloud survive the climate storm? 🌍🌡️🥵

🗓️ Upcoming February AI Events:

➡️NISO Plus, Baltimore, Feb 13-14; AI-related sessions:

Opening Keynote: States of Open AI

AI Tools in Scholarly Research & Publishing

AI & Machine Learning in Discovery & Research

Transparency & Trust in an AI World

Building or Buying: AI for the Scholarly Ecosystem

AI & Machine Learning: What to Know & How to Talk about it to Researchers & Patrons

Ethical Issues Using AI in Scholarly Publishing

Generative AI & Intellectual Properties: Issues & Options

➡️The Heart of the Matter: Copyright, AI Training & LLMs; FREE event by Copyright Clearance Center (CCC) on Thu, Feb 29

Feedback Corner

If you enjoyed reading the newsletter, sign up or share with someone who may be interested!

Thanks for reading! Your feedback is important for us to cater to your scholarly needs:

Reader’s Sounding Board

Please share your thoughts, suggestions, & comments: augmentscholpub@gmail.com for us to share with our audience.

Until next newsletter,

Chhavi Chauhan & Chirag Jay Patel