Augmenting Scholarly Publishing: Intelligent Emerging Tools & Trends

The Evolving World of AI Policies

Welcome, ScholCom/MedCom peers,

⬅ Reflecting Back –

📈 The growing number of layoffs across industries prompted us to revisit our opening newsletter of ’24 on the future of work 🔮, especially as Turnitin begins its layoffs and the fintech company Klarna reveals how far AI efficiencies could go in replacing its human workforce.

📢 OpenAI announced a “new” Board as it continues to be challenged left, right, and center by news outlets over AI training. This made us think (yes, yet again!) about the murky world of copyright © (➡️ check out the interesting poll results!)

➡️ The growing interest in a certain “rat” keeps our last newsletter, on AI-generated scientific content 🤖, relevant & in demand 😎

⏭️ Moving On –

📢 We have heard from our scholarly publishing peers that they remain inundated with either putting new policies & regulations in place or revising existing ones ⚖️ So we decided to review the current state of affairs to mindfully guide our next steps 💡

➡️ We first review global AI regulations broadly, then the specifics of the scholarly publishing industry in the context of authors, publishers, journals, editors, libraries, higher education, & more!

🧭 How it all started –

🌟 The European Union (EU) has been at the forefront of AI regulation ⛔ & the EU Artificial Intelligence Act (AIA) is setting the current standards ⚖️ that AI vendors must meet to make their tools compliant for sustainable global use 🌏

🗺️ The United (States) Stand –

🗓️ Since last year, there have been several developments in the US:

➡️ The Blueprint for an AI Bill of Rights from the White House Office of Science and Technology Policy (OSTP)

➡️ The AI Risk Management Framework from the National Institute of Standards & Technology (NIST)

➡️ The National Artificial Intelligence Research Resource Pilot (NAIRR) from the U.S. National Science Foundation (NSF).

➡️ The establishment of the U.S. AI Safety Institute (USAISI) & the supporting USAISI Consortium with >200 member organizations.

🌏 The Global Stand –

The OECD.AI policy observatory offers a comprehensive repository of >1000 AI policy initiatives from 69 countries 🌏

💭 Responsible Tech – A Continued Dream or a Reality in the Making?

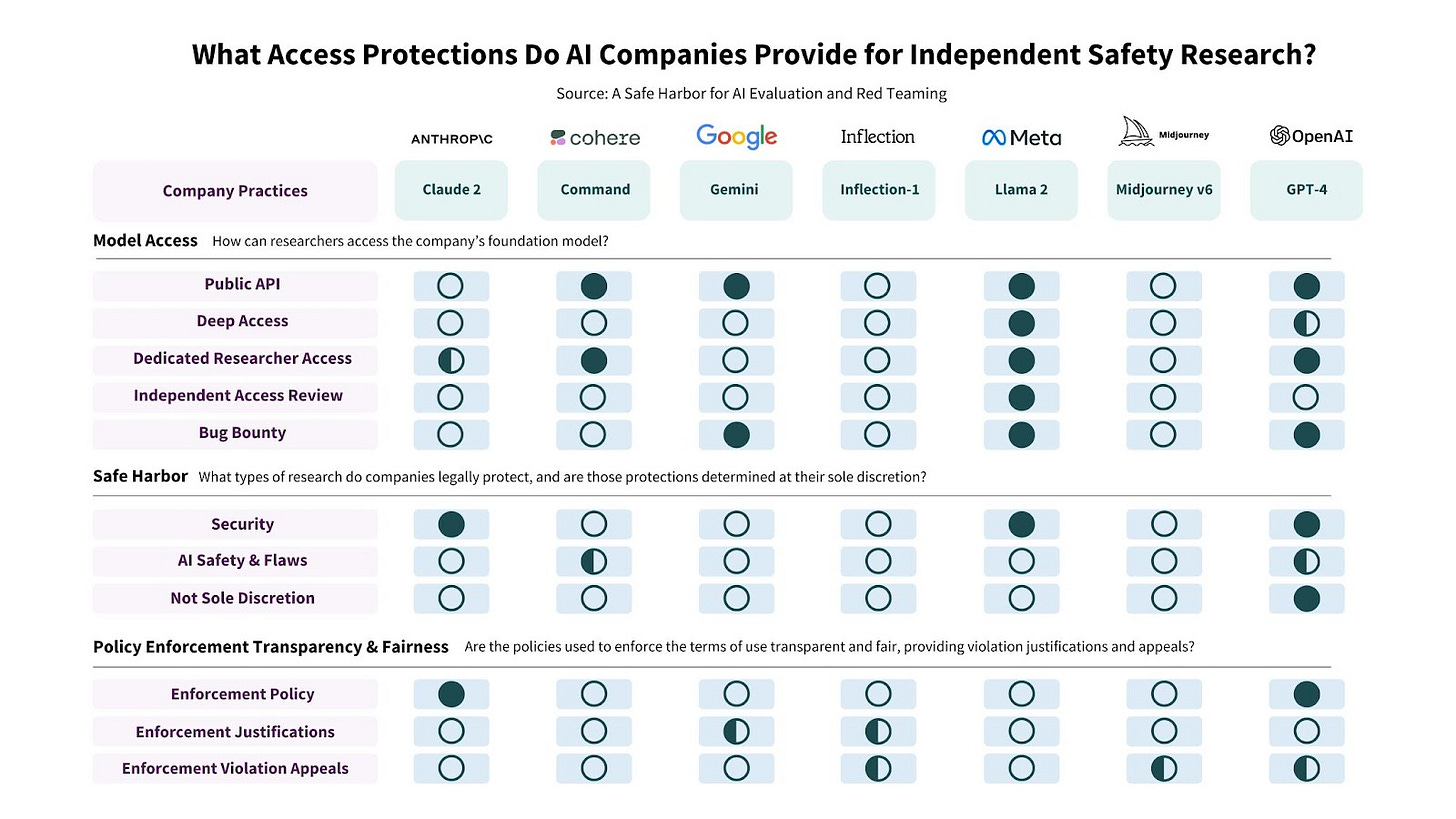

Self-audits are neither unbiased nor transparent 🤔, yet legal protections for independent evaluation of generative AI risks remain a dream. 🌟 To make this dream a reality, responsible generative AI researchers recommend an independent safe harbor for AI evaluation & red teaming. ✒️ Sign the open letter, already signed by >100 leading researchers, journalists, & advocates, if it appeals to you.

🆕 The Evolving Gen AI Policies in Scholarly Publishing –

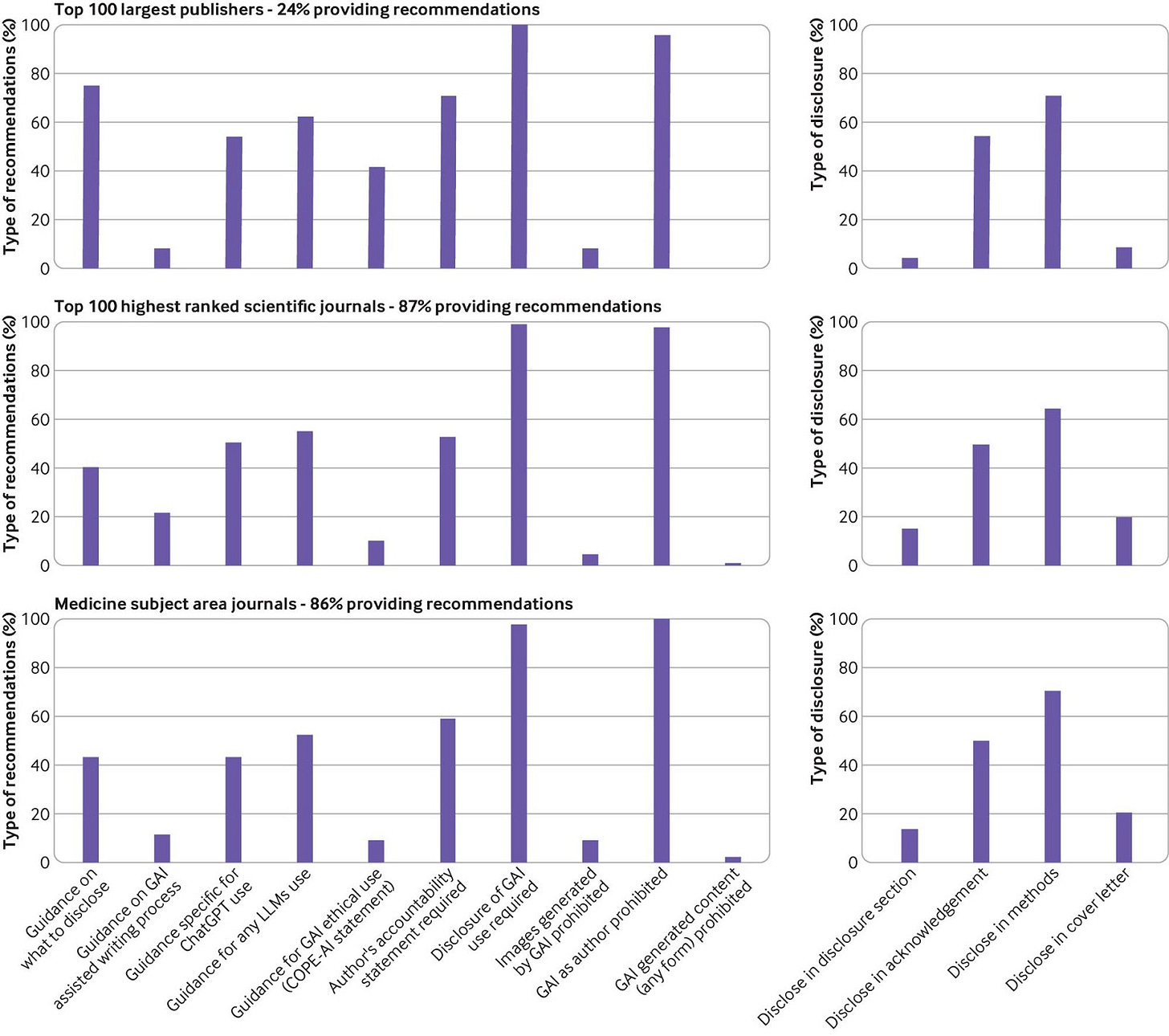

📢 A recent BMJ publication provides the most comprehensive analysis to date of generative AI use guidelines for authors across the 100 largest academic publishers & the 100 highest-ranked scientific journals.

⬇️ Below, we break down current policies from select publishers, journals, editors, libraries, & others to help you explore our industry's policy landscape & build upon it 🏗️

The Publishers’ Stand –

Elsevier -

📈 AI tools should only enhance readability, not replace critical authoring tasks. 📢 Authors must disclose AI use, avoid attributing authorship to AI, & adhere to Elsevier's ethics policy. ⛔ Restrictions apply to using AI to create or manipulate images.

The Journals’ Stand –

Nature -

⛔ Large language models (LLMs) cannot be listed as authors, & any use of an LLM by an author should be properly documented in the Methods section 🛑 Peer reviewers are encouraged not to use LLMs, both because these tools may lack up-to-date knowledge & may produce nonsensical, biased, or false information, & to protect sensitive or proprietary information in manuscripts.

Science -

⛔ Does not allow AI-generated text, figures, or images.

JAMA -

☑️ Requires disclosure of AI use in manuscripts ⚠️ & cautions against improper AI use in peer review 📈 Though AI promises publishing efficiencies, ➡️ JAMA stresses responsible use, as biases & ethical issues remain inadequately addressed.

PLOS ONE -

☑️ Allows the use of AI tools.

NEJM AI -

✅ Permits the use of LLMs in scientific writing with proper acknowledgment & author accountability 📈 LLMs can enhance scientific work but must not be listed as coauthors ⛔

The Editors’ Stand –

Consistent with the stand taken by the Committee on Publication Ethics (COPE), the editors of Accountability in Research; Bioethics; Developing World Bioethics; Ethics & Human Research; & Hastings Center Report recommend ⛔ against listing LLMs as authors, 🔎 focus on author & editorial transparency in the use of generative AI, 👀 call for editorial oversight in peer review, & 😇 emphasize reliance on human authors & editors.

The Libraries’ Stand –

American Libraries interviewed 5 technology experts, educators, & librarians who leverage generative AI at their institutions to understand its current use in libraries, the underlying ethical concerns, & the future course of action for responsible adoption.

The Higher Education Stand –

Last summer, Scribbr analyzed the policies of the top 100 universities & reported a lack of clear guidance at 27% of them. A slight majority (51%) left the decision to individual instructors, whereas only 4% allowed use with citation unless an instructor disallowed it. Another 18% banned use by default but allowed it with permission from individual instructors. Please review the list of individual universities in this survey for updated policies.

Source: https://www.scribbr.com/ai-tools/chatgpt-university-policies/

And Some “More” Stands:

The International Society for Medical Publication Professionals (ISMPP) position statement & call to action on artificial intelligence: ISMPP urges medical publication & communication professionals to commit to Appropriate AI Utilization, Maintaining Confidentiality, Driving Accountability, Appropriate Disclosure, Ensuring Responsibility, Data Transparency, Eliminating Bias, Enhancing Accessibility, Respecting the Academic Integrity of AI, Educating about AI, & Addressing AI Misconceptions.

The International Committee of Medical Journal Editors (ICMJE) updated the recommendations for the Conduct, Reporting, Editing, & Publication of Scholarly Work in Medical Journals.

The Public Relations Society of America (PRSA) Board of Ethics and Professional Standards (BEPS) guides the ethical use of AI for public relations practitioners.

➡️ TL;DR, the Bottom Line:

➡️ The guidelines on appropriate generative AI use by authors remain inconsistent 😑

➡️ Varying policies on disclosure & allowable uses burden authors and risk ineffectiveness 😏

➡️ Standardized guidelines will be needed to protect scientific integrity❗

📢 Bonus Shout-Out:

ICYMI the Copyright Clearance Center’s engaging recent Town Hall exploring the intersection of AI & copyright ⚖️, you may want to visit their resource center to join the conversation.

Poll Double Header

🔎Avi’s AI Tool Spotlight:

Perplexity AI is a conversational search engine that answers queries using natural language predictive text. Perplexity generates answers from web sources & cites links within the text response. Perplexity’s model is based on OpenAI's GPT-3.5 model combined with the company's standalone LLM that incorporates natural language processing (NLP) capabilities.

Quick Summaries

Turnitin laid off staff earlier this year, after CEO forecast AI would allow it to cut headcount: True to Turnitin CEO Chris Caren’s statement last year that the company would leverage AI to cut its workforce by 20%, a slow, premeditated process of layoffs has now begun. Engineering jobs are on the line for now (to be followed by sales & marketing), with an eventual focus on replacing more senior, college-educated personnel with new talent straight out of high school.

Klarna CEO says AI can do the job of 700 workers. But job replacement isn't the biggest issue: The fintech company Klarna is transparent about how it is leveraging AI to improve workflow efficiency as well as customer satisfaction, which will ultimately mean replacing human workers. The company stopped hiring 6 months ago & is apparently shrinking naturally through “attrition”!

OpenAI hit with new lawsuits from news outlets over AI training: News outlets including The Intercept, Raw Story, & AlterNet sue OpenAI, alleging misuse of copyrighted articles to train ChatGPT. Lawsuits seek damages & injunctions, highlighting broader concerns over AI's use of copyrighted material. OpenAI faces growing legal scrutiny amid claims of copyright infringement.

The EU Artificial Intelligence Act: Up-to-date developments and analyses of the EU AI Act: The EU AIA website describes the Act in detail, offers a compliance checker to guide vendors in developing sustainable tools that can be used globally, & links to a biweekly newsletter covering new developments in, & analyses of, the Act itself.

Blueprint For An AI Bill Of Rights: Making Automated Systems Work For The American People: The White House Office of Science and Technology Policy (OSTP) has identified 5 principles to guide the design, use, & deployment of automated systems to protect the American public in the age of AI. The five principles are: Safe & Effective Systems, Algorithmic Discrimination Protections, Data Privacy, Notice & Explanation, & Human Alternatives, Consideration, & Fallback.

AI Risk Management Framework: The framework of the National Institute of Standards & Technology (NIST) is intended to be voluntary, rights-preserving, non-sector-specific, & use-case agnostic, providing flexibility for organizations of all sizes & sectors to implement the outlined approaches.

National Artificial Intelligence Research Resource Pilot: The NAIRR pilot will run for 2 years, beginning January 24, 2024. The pilot will broadly support fundamental, translational, & use-inspired AI-related research with particular emphasis on societal challenges. Initial priority topics include safe, secure, & trustworthy AI; human health; & environment & infrastructure.

U.S. Artificial Intelligence Safety Institute (USAISI): NIST established the USAISI & the supporting USAISI consortium of >200 member organizations with the aim of developing empirically backed scientific guidelines & standards for global AI safety.

National AI policies & strategies: The user-friendly OECD.AI policy observatory makes it easy to search >1000 AI policies from 69 countries, territories, & the EU. Besides filtering by region, users can filter by policy instrument & target group.

A Safe Harbor for AI Evaluation & Red Teaming: Responsible AI researchers studying generative AI systems reviewed the policies of 7 generative AI model developers & recommended that the major AI developers commit to legal & technical safe harbors. The goal is to protect public interest safety research against legal reprisal. In this LinkedIn post, Kevin K. thoughtfully lists the concerns pertaining to independent safety research.

Source: https://knightcolumbia.org/blog/a-safe-harbor-for-ai-evaluation-and-red-teaming

Publishers’ and journals’ instructions to authors on use of generative artificial intelligence in academic & scientific publishing: bibliometric analysis: The study found that 24% of the 100 largest publishers & 87% of the 100 highest-ranked scientific journals offered guidance on the use of generative AI, as did 86% of journals in the medicine subject area.

Source: https://www.bmj.com/content/384/bmj-2023-077192

Generative AI in Higher Education - The Product Landscape: A new report from Ithaka S+R by Claire Baytas & Dylan Ruediger may help you navigate the crowded generative AI (GAI) marketplace. Their Product Tracker catalogs >100 GAI applications to support informed decisions. Products that assist in the discovery, understanding, & content creation phases may revolutionize higher education workflows.

🌎 Sustainability reads ♻️

The Scariest Part About Artificial Intelligence: AI poses serious threats to our sustainable future due to its excessive water & energy consumption, contribution to e-waste, & reliance on critical minerals.

AI likely to increase energy use & accelerate climate misinformation – report: Environmental groups caution against overestimating AI's role in combating climate change. While touted as a solution, AI may actually worsen the crisis by driving up energy consumption & spreading climate disinformation.

The Obscene Energy Demands of A.I.: To respond to an estimated 200 million requests/day, ChatGPT may be consuming >0.5 million kilowatt-hours of electricity per day, while the average U.S. household consumes about 29 kilowatt-hours/day! YIKES!
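For a sense of scale, here is a back-of-the-envelope calculation using only the figures quoted above. It assumes ">0.5 million kWh" is taken at its lower bound of 500,000 kWh/day, & all three inputs are the article's estimates, not measurements. It works out the average energy per request & how many average U.S. households that much electricity could supply each day.

```python
# Back-of-the-envelope arithmetic with the estimates quoted above (not measured values).
DAILY_REQUESTS = 200_000_000   # estimated ChatGPT requests per day
DAILY_ENERGY_KWH = 500_000     # ">0.5 million kWh" per day, taken at its lower bound
HOUSEHOLD_KWH_PER_DAY = 29     # average U.S. household electricity use per day


def energy_per_request_wh(energy_kwh: float, requests: int) -> float:
    """Average energy per request in watt-hours (1 kWh = 1,000 Wh)."""
    return energy_kwh * 1000 / requests


def household_equivalents(energy_kwh: float, household_kwh: float) -> float:
    """Number of average households whose daily use equals the given daily energy."""
    return energy_kwh / household_kwh


per_request = energy_per_request_wh(DAILY_ENERGY_KWH, DAILY_REQUESTS)
households = household_equivalents(DAILY_ENERGY_KWH, HOUSEHOLD_KWH_PER_DAY)

print(f"~{per_request:.1f} Wh per request")
print(f"~{households:,.0f} U.S. households' daily electricity use")
```

With these inputs, the sketch prints about 2.5 Wh per request & roughly 17,241 households. Because the energy figure is a lower bound, both numbers are floors rather than precise values.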

🗓️ Upcoming March AI Events:

➡️ AI and Beyond: A vision for the future of publishing technology; FREE webinar for ALPSP members ONLY on March 26

➡️ Generative AI’s Impact on Authoring Medical Research; FREE webinar by SAIH on March 26

Feedback Corner

If you enjoyed reading the newsletter, subscribe or share it with someone who may be interested!

Thanks for reading! Your feedback helps us cater to your scholarly needs:

Reader’s Sounding Board

Please share your thoughts, suggestions, and comments at augmentscholpub@gmail.com so we can share them with our audience.

➡️ What policies are you using in your scholarly publishing domain to handle the use of AI in your specific context?

Until next newsletter,

Chhavi Chauhan and Chirag Jay Patel